Overview

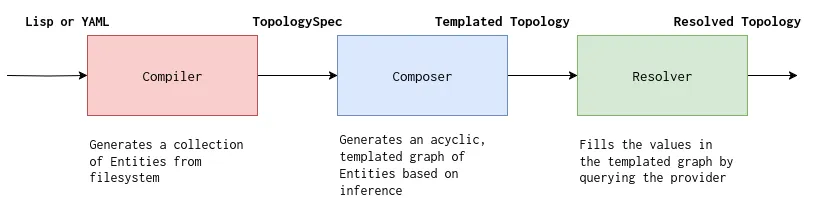

tc has three core internal modules that provide the data structures required to build sophisticated workflows:

- Compiler

- Composer

- Resolver

We can try this out on the command line:

```sh
cd examples/states/basic
tc compile | tc compose | tc resolve --sandbox yoda --profile dev
```

The input to the compiler is a shallow definition of entities in YAML or LISP. The LISP interpreter is still under development and will eventually become the default input language. The compiler also walks the topology directory for functions, fetches any remote functions, and interns them. The compiler's job is to make sure the entity definitions are valid, and its output is cloud-agnostic.
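As a rough illustration of the compiler's validate-and-intern step, here is a minimal Python sketch. It assumes the YAML has already been parsed into a dict. The entity kinds in `KNOWN_ENTITIES`, the `uri` and `function` fields, and the `CompiledTopology` type are hypothetical names chosen for illustration, not tc's actual schema or internals.

```python
from dataclasses import dataclass, field

# Hypothetical entity kinds; tc's real schema may differ.
KNOWN_ENTITIES = {"functions", "queues", "states", "routes", "events"}


@dataclass
class CompiledTopology:
    name: str
    entities: dict = field(default_factory=dict)
    # Interned function specs, keyed by function name.
    functions: dict = field(default_factory=dict)


def compile_topology(spec: dict) -> CompiledTopology:
    """Validate a shallow entity definition (already parsed from YAML)
    and intern its functions. Remote functions are only recorded, not fetched."""
    if "name" not in spec:
        raise ValueError("topology must have a name")
    unknown = set(spec) - KNOWN_ENTITIES - {"name"}
    if unknown:
        raise ValueError(f"unknown entity kinds: {sorted(unknown)}")

    topo = CompiledTopology(name=spec["name"])
    for kind in sorted(KNOWN_ENTITIES & set(spec)):
        topo.entities[kind] = spec[kind]

    # Local functions default to a sibling directory; any other URI is remote.
    for fname, fdef in spec.get("functions", {}).items():
        uri = fdef.get("uri", f"./{fname}")
        topo.functions[fname] = {"uri": uri, "remote": not uri.startswith("./")}

    # Cross-reference check: every queue must target a declared function.
    for qname, qdef in spec.get("queues", {}).items():
        target = qdef.get("function")
        if target not in topo.functions:
            raise ValueError(f"queue {qname!r} targets unknown function {target!r}")
    return topo
```

Nothing in this output refers to an account, region, or ARN, which is what keeps it cloud-agnostic in this sketch.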

The composer builds a DAG of these entities and, based on the configured provider, figures out the connectors and shims: for example, how a queue is composed with a state (stepfn) or how Lambda functions are composed together. The composer also generates ASL (Amazon States Language) to orchestrate the functions using stepfn.
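The DAG-plus-ASL idea can be sketched in a few lines of Python. This assumes a simplified dependency map of function names and only handles a linear chain of Lambda tasks; the `{{fn:NAME}}` placeholder syntax and the helper names are hypothetical. The ASL fields used (`StartAt`, `States`, `Type`, `Resource`, `Next`, `End`) are standard Amazon States Language.

```python
from graphlib import TopologicalSorter


def build_order(deps: dict) -> list:
    """Return entity names in dependency order.

    `deps` maps each entity to the set of entities it depends on.
    Raises graphlib.CycleError if the graph is not a DAG.
    """
    return list(TopologicalSorter(deps).static_order())


def generate_asl(functions: list) -> dict:
    """Chain functions sequentially as ASL Task states.

    Resources are left as hypothetical {{fn:NAME}} placeholders so the
    result stays templated until a resolve step fills in real ARNs.
    """
    if not functions:
        raise ValueError("need at least one function")
    states = {}
    for i, fn in enumerate(functions):
        state = {"Type": "Task", "Resource": "{{fn:" + fn + "}}"}
        if i + 1 < len(functions):
            state["Next"] = functions[i + 1]
        else:
            state["End"] = True
        states[fn] = state
    return {"StartAt": functions[0], "States": states}
```

Because `static_order` flattens the graph, independent branches would be serialized here; a composer that preserves parallelism would emit ASL `Parallel` states instead.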

The output of the composer is a templated topology (in JSON) that can be rendered on any sandbox or account.

The output of the resolver is a self-contained JSON topology containing all the generated infrastructure boilerplate, references (ARNs, IDs), versions of all subcomponents, entity-component relationships, and so on. We can then use this topology for deployment and other workflows.
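To make the templated-versus-resolved distinction concrete, the following sketch renders a templated topology for one sandbox by replacing placeholders with Lambda ARNs. The `{{fn:NAME}}` placeholder syntax and the `NAME_SANDBOX` function naming convention are assumptions for illustration; only the ARN layout (`arn:aws:lambda:REGION:ACCOUNT:function:NAME`) follows the standard AWS format. tc's resolver also records versions and entity-component relationships, which this sketch omits.

```python
import json
import re

# Hypothetical placeholder syntax left behind by the compose step.
PLACEHOLDER = re.compile(r"\{\{fn:([A-Za-z0-9_-]+)\}\}")


def lambda_arn(region: str, account: str, name: str) -> str:
    """Standard AWS Lambda function ARN layout."""
    return f"arn:aws:lambda:{region}:{account}:function:{name}"


def resolve(templated: dict, sandbox: str, account: str, region: str) -> dict:
    """Render a templated topology for one sandbox.

    Each {{fn:NAME}} placeholder becomes the ARN of NAME_SANDBOX
    (a hypothetical naming convention). The input dict is not modified.
    """
    text = json.dumps(templated)  # work on a serialized copy

    def to_arn(match: re.Match) -> str:
        return lambda_arn(region, account, f"{match.group(1)}_{sandbox}")

    resolved = json.loads(PLACEHOLDER.sub(to_arn, text))
    resolved["sandbox"] = sandbox  # record which sandbox this was rendered for
    return resolved
```

The same templated input can be resolved repeatedly for different sandboxes, which is the property the composer's output is meant to have.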

```sh
tc create --sandbox yoda --profile --topology <TOPOLOGY.json>
```

If we don't specify a topology, tc implicitly runs compile, compose and resolve. The same is true for all other workflow commands: invoke, snapshot, test, delete, update, etc.
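The fallback behavior can be pictured with a hypothetical `load_or_build` helper that reads a pre-resolved topology file when one is given and otherwise runs the three stages in order. The stage callables stand in for compile, compose and resolve; this is a sketch of the control flow, not tc's implementation.

```python
import json
from pathlib import Path
from typing import Callable, Optional


def load_or_build(
    topology_path: Optional[str],
    compile_: Callable[[], dict],
    compose_: Callable[[dict], dict],
    resolve_: Callable[[dict], dict],
) -> dict:
    """Use a pre-resolved topology file if given; otherwise run
    compile -> compose -> resolve, mirroring `tc compile | tc compose | tc resolve`."""
    if topology_path is not None:
        return json.loads(Path(topology_path).read_text())
    return resolve_(compose_(compile_()))
```

In this sketch, supplying a path skips all three stages entirely.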