ETL

The goal of this example is to introduce defining and composing functions of different kinds: functions with different runtimes and different dependency-packaging mechanisms. It also introduces tc’s sophisticated builder.

The pipeline

- The pipeline has three functions: Enhancer, Transformer and Loader.

- It sends a notification when the ETL process completes.

- It hosts a simple HTML page that shows the notifications.

Composition

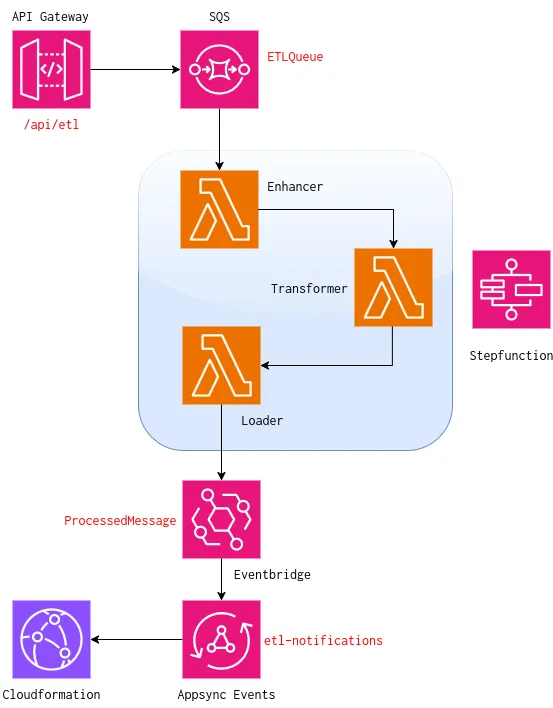

Section titled “Composition”If you were to ask an architect or Chat GPT, we may get an architecture something like this:

Can we define this topology at a high level without knowing anything about the underlying services? It’s possible with tc.

Let’s break it down and incrementally design the topology:

Step 1 - Async API

    name: etl

    routes:
      /api/etl:
        method: POST
        async: true
        function: enhancer

The enhancer function implementation is irrelevant for the sake of this example. It could be written in Ruby, Python, Clojure or Rust. Let’s assume there is a function directory called enhancer with some piece of code.
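For concreteness, here is a minimal sketch of what the code in the enhancer directory could look like if it were written in Python. The `handler(event, context)` signature, the file layout and the fields added to the record are all assumptions made for illustration; the source does not specify them, and tc may expect something different.

```python
import json
from datetime import datetime, timezone


def handler(event, context=None):
    """Hypothetical enhancer: enrich the incoming record with metadata."""
    # Accept either a dict or a JSON string. The real payload shape depends on
    # how the route is wired to the function and is an assumption here.
    record = json.loads(event) if isinstance(event, str) else dict(event)
    record["enhanced"] = True  # illustrative field, not required by tc
    record["received_at"] = datetime.now(timezone.utc).isoformat()
    return record
```

Because the route is declared with `async: true`, the caller would not wait for this return value; the sketch only shows the shape of the function body.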

Let’s create a sandbox called yoda using the dev AWS_PROFILE:

    tc create -s yoda -e dev

Step 2 - Queue Requests

    name: etl

    routes:
      /api/etl:
        method: POST
        queue: ETLQueue

    queues:
      ETLQueue:
        function: enhancer

A queue may not be strictly necessary. However, in this context, the requirement is to preserve the order of requests. By default, tc configures a FIFO queue.
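To illustrate why ordering matters on the consuming side, the sketch below shows a queue-triggered function that handles a batch strictly in delivery order. It assumes the queue delivers an SQS-style batch (`event["Records"]`, each with a JSON `body`) and that each request carries an `id` field; both are assumptions, since the source does not describe the payload tc hands to the function.

```python
import json


def handler(event, context=None):
    """Process a batch of queued requests in the order they were delivered."""
    processed = []
    # Assumed SQS-style batch: a list of records, each carrying the original
    # request as a JSON string in "body".
    for record in event.get("Records", []):
        request = json.loads(record["body"])
        processed.append(request["id"])  # "id" is a hypothetical request field
    return processed
```

With a FIFO queue, the order of the records in the batch reflects the order in which the requests were enqueued, so iterating front to back preserves it.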

Now update our yoda sandbox:

    tc update -s yoda -e dev

Step 3 - DAG of functions

    name: etl

    routes:
      /api/etl:
        method: POST
        queue: ETLQueue

    queues:
      ETLQueue:
        function: processor

    functions:
      enhancer:
        root: true
        function: transformer
      transformer:
        function: loader

The enhancer, transformer and loader functions need not have any input/output transformation code. By specifying the DAG of functions above, tc composes and generates the ASL (Amazon States Language) required for Step Functions. We can inspect the generated ASL by running the tc compose command below.
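To build some intuition before looking at the real output, the chaining can be sketched in Python. This is not tc's implementation, and the output is only ASL-like: the `chain_to_states` helper and the placeholder resource strings are hypothetical. It handles a simple linear chain only, starting from the function marked `root: true`.

```python
import json


def chain_to_states(functions):
    """Turn a linear chain of functions into an ASL-like state machine dict."""
    # The entry point is the function marked root: true.
    start = next(name for name, spec in functions.items() if spec.get("root"))
    states = {}
    current = start
    while current is not None:
        if current in states:
            raise ValueError(f"cycle detected at {current!r}")
        nxt = functions.get(current, {}).get("function")
        # Placeholder resource string, not a real ARN.
        state = {"Type": "Task", "Resource": f"<arn-of-{current}>"}
        if nxt is None:
            state["End"] = True  # last function in the chain
        else:
            state["Next"] = nxt  # the "function:" link becomes a transition
        states[current] = state
        current = nxt
    return {"StartAt": start, "States": states}


functions = {
    "enhancer": {"root": True, "function": "transformer"},
    "transformer": {"function": "loader"},
}
print(json.dumps(chain_to_states(functions), indent=2))
```

The ASL that tc actually generates will likely contain more detail; the sketch only shows how each `function:` link maps to a `Next` transition and how the final function becomes a terminal state.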

    tc compose -s states -f yaml

    tc update -s yoda -e dev

Step 4 - Notification

    name: etl

    routes:
      /api/etl:
        method: POST
        queue: ETLQueue

    queues:
      ETLQueue:
        function: enhancer

    functions:
      enhancer:
        root: true
        uri: ./enhancer
        function: transformer
      transformer:
        function: loader
        event: ProcessedMessage

    events:
      ProcessedMessage:
        channel: etl-notifications

    channels:
      etl-notifications:
        authorizer: default

The loader function emits a ProcessedMessage event. tc takes care of structuring the payload and using the right event pattern. Eventually, the event triggers a WebSocket notification via the etl-notifications channel, which is an AppSync event channel.
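To make "event pattern" a little more concrete, the sketch below shows the general idea of pattern matching on an event envelope. It assumes an EventBridge-style envelope in which a pattern lists the allowed values for each top-level field. The envelope fields, the `etl` source name and the `matches` helper are hypothetical; the payload and pattern that tc actually produces may differ.

```python
def matches(pattern, event):
    """Return True if, for every pattern field, the event's value is allowed."""
    return all(event.get(field) in allowed for field, allowed in pattern.items())


# Hypothetical envelope and pattern; the values are illustrative only.
event = {
    "source": "etl",
    "detail-type": "ProcessedMessage",
    "detail": {"id": 7, "status": "loaded"},
}
pattern = {"detail-type": ["ProcessedMessage"]}

if matches(pattern, event):
    print("forward to channel etl-notifications")
```

The point of the topology definition is that none of this has to be written by hand: declaring `event: ProcessedMessage` and its channel is enough.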

    tc update -s yoda -e dev

Step 5 - Webpage

    name: etl

    routes:
      /api/etl:
        method: POST
        queue: ETLQueue

    queues:
      ETLQueue:
        function: enhancer

    functions:
      enhancer:
        root: true
        uri: ./enhancer
        function: transformer
      transformer:
        function: loader
        event: ProcessedMessage

    events:
      ProcessedMessage:
        channel: etl-notifications

    channels:
      etl-notifications:
        authorizer: default

    pages:
      app:
        dist: .
        dir: webapp

Here we have it! With just a few lines of abstract definition, we get an end-to-end ETL pipeline working with almost no infrastructure code.